Nvidia (NVDA) – Company Overview & Business Summary

NVIDIA is a leading accelerated computing company. It designs and sells GPUs and full-stack AI/compute platforms—spanning chips, systems, networking, and software—that power data centers, AI development and inference, gaming/creative PCs, autonomous machines, and automotive.

Nvidia Financial Performance & Key Metrics

- Fiscal year end: Late January (FY2025 ended Jan 26, 2025).

- FY2025 revenue: $130.5B (+114% y/y).

- FY2025 GAAP net income: $72.9B (+145% y/y).

- Q4 FY2025 revenue: $39.3B; Data Center FY2025 revenue: $115.2B.

These reflect surging demand for NVIDIA’s AI platforms in cloud and enterprise data centers.
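As a quick consistency check on the figures above, the prior-year values can be backed out from the stated growth rates, and the Data Center share and net margin can be computed directly. This is a minimal Python sketch that uses only the numbers quoted in this section; the helper `prior_year_value` is a hypothetical name chosen for illustration.

```python
# Sanity-check the FY2025 headline figures quoted above.
# All values are in billions of USD and come from the bullets above.

def prior_year_value(current: float, yoy_growth_pct: float) -> float:
    """Back out the prior-year value from a current value and its y/y growth (%)."""
    return current / (1 + yoy_growth_pct / 100)

fy25_revenue = 130.5
fy25_net_income = 72.9
fy25_data_center = 115.2

fy24_revenue = prior_year_value(fy25_revenue, 114)        # implied FY2024 revenue
fy24_net_income = prior_year_value(fy25_net_income, 145)  # implied FY2024 net income
data_center_share = fy25_data_center / fy25_revenue       # Data Center share of total revenue
net_margin = fy25_net_income / fy25_revenue               # GAAP net margin

print(f"Implied FY2024 revenue:    ${fy24_revenue:.1f}B")
print(f"Implied FY2024 net income: ${fy24_net_income:.1f}B")
print(f"Data Center share:         {data_center_share:.1%}")
print(f"GAAP net margin:           {net_margin:.1%}")
```

Run as-is, it implies FY2024 revenue of about $61.0B and net income of about $29.8B, and puts Data Center at roughly 88% of FY2025 revenue, in line with the revenue-mix table further down.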

Bull Case – Why Investors Are Optimistic

- It is the company at the heart of the infrastructure phase of the AI industrial revolution.

- Is using its cash to take equity stakes in key AI companies such as OpenAI and Anthropic, which gives it preferred compute-partner status. Although these deals are non-exclusive, they are expected to steer the majority of these partners' compute toward NVIDIA chips.

- NVIDIA has locked down the supply chain: at any chokepoint, it receives preference because it is the largest customer.

- China data-center revenue is effectively zero at the moment, so any thawing of US–China relations that allows NVIDIA chips to be sold there would be entirely new revenue.

Management Outlook (Q3 FY2026, quarter ended Oct 2025)

- Cloud GPU capacity is sold out across generations, including older Ampere and Hopper as well as Blackwell.

- $500B of Blackwell and Rubin bookings through the end of calendar 2026. The number will likely grow, since deals such as those with Anthropic and Saudi Arabia (70k chips) are still underway.

- ~$600B of expected capex across hyperscalers in 2026.

- NVIDIA's take rate per GW of data-center capacity, by generation: Hopper ~$25B/GW, Blackwell ~$30B/GW, Rubin higher.

- GB300 contributed roughly two-thirds of Blackwell revenue.

- Three platform shifts drive demand: (1) accelerated computing, as the slowing of Moore's law forces workloads to move from CPUs to GPUs; (2) generative AI; (3) agentic AI.

- Rubin CPX is designed to improve long-context performance, i.e., the large amount of reading and thinking a model does before it produces an answer.

- Stock buybacks to continue.
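The take rates above can be used to translate dollar bookings into gigawatts of capacity and back. The sketch below assumes (an interpretation, not a company definition) that the take rate is NVIDIA revenue per GW of deployed data-center capacity, and that all bookings are priced at a single generation's rate; the function names are hypothetical.

```python
# Rough conversion between dollar bookings and deployed gigawatts (GW),
# using the per-GW take rates quoted above (billions of USD per GW).
# Assumption: all bookings are priced at one generation's rate; real bookings
# mix Blackwell and Rubin, and Rubin's rate is higher.

TAKE_RATE_B_PER_GW = {"Hopper": 25.0, "Blackwell": 30.0}

def implied_gigawatts(bookings_b: float, take_rate_b_per_gw: float) -> float:
    """GW of capacity that a bookings total (in $B) would cover at a given take rate."""
    return bookings_b / take_rate_b_per_gw

def implied_revenue_b(gigawatts: float, take_rate_b_per_gw: float) -> float:
    """NVIDIA revenue ($B) implied by deploying `gigawatts` of capacity."""
    return gigawatts * take_rate_b_per_gw

bookings_b = 500.0  # Blackwell + Rubin bookings through calendar 2026
for gen, rate in TAKE_RATE_B_PER_GW.items():
    gw = implied_gigawatts(bookings_b, rate)
    print(f"$500B at {gen} rate (${rate:.0f}B/GW): {gw:.1f} GW")

# Example: a 10 GW build-out (the size of the OpenAI commitment in the contract table)
print(f"10 GW at Blackwell rate: ${implied_revenue_b(10, TAKE_RATE_B_PER_GW['Blackwell']):.0f}B")
```

At Blackwell's ~$30B/GW, $500B of bookings works out to roughly 16.7 GW (20 GW at Hopper's rate). Because part of the bookings is Rubin, which carries a higher rate, the true GW figure would be somewhat lower.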

Customer Contract Wins

- Contract wins for 2025–26 total ~$0.5T as of Oct 28, 2025.

| Customer / Partner | Deal / What Was Announced | Potential Revenue / Investment | Time Period (Start–End) |
|---|---|---|---|
| OpenAI | Strategic partnership to deploy ≥10 GW of NVIDIA systems (millions of GPUs). NVIDIA also intends to invest up to $100B in OpenAI as deployments progress. | Up to $100B total investment (multi-year). Estimated multi-billion $ hardware and services revenue annually as each GW is deployed. | First GW in 2H 2026; multi-year rollout (no end date disclosed). |
| Nokia (with T-Mobile collaboration) | Partnership to embed NVIDIA’s AI-RAN / ARC-Pro platform in Nokia’s telecom gear; NVIDIA making a $1B equity investment. | $1B equity investment (disclosed). Additional AI-RAN product revenue not disclosed. | Trials with T-Mobile start 2026; ongoing multi-year expansion. |
| DGX Spark early adopters (SpaceX, OpenAI, Zipline, ASU, NYU, etc.) | Shipments of DGX Spark AI desktop systems to early customers and research institutions. | $3,999 per unit (retail). Enterprise / bulk pricing varies. Likely small one-time product revenue. | Deliveries began Oct 2025; one-time sales (no contract duration). |
| Intel | Strategic AI infrastructure collaboration and joint PC AI initiatives; NVIDIA to invest (reported $5B) in Intel. | Approx. $5B investment (per reports). Product revenue from collaboration not disclosed. | Announced Sep 2025; long-term, no fixed end date. |
| Department of Energy | Partnership to build 7 AI supercomputers. | Not specified. | Announced Oct 28, 2025. |

TAM / CAGR

Per management, AI infrastructure spend is ~$600B in 2025 and is expected to reach $3–4T by 2030, an implied CAGR of roughly 38–46% (the ~45% figure sits at the upper end of that range). The main drivers are agentic AI and physical AI, such as self-driving cars and robotics.
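The growth rate implied by moving from ~$600B (2025) to $3–4T (2030) can be checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) − 1. A minimal sketch, treating 2025 to 2030 as five full years (an assumption about how the period is counted):

```python
# Compound annual growth rate implied by management's AI-infrastructure
# spend figures: ~$600B in 2025 growing to $3-4T by 2030.

def cagr(start: float, end: float, years: int) -> float:
    """Constant annual growth rate that takes `start` to `end` over `years` years."""
    return (end / start) ** (1 / years) - 1

start_b, years = 600.0, 2030 - 2025  # assumed: five compounding years
for end_b in (3000.0, 4000.0):
    print(f"$600B -> ${end_b / 1000:.0f}T: {cagr(start_b, end_b, years):.1%} CAGR")

# Conversely: the 2030 spend that a 45% CAGR from $600B would produce
print(f"45% CAGR from $600B -> ${start_b * 1.45 ** years / 1000:.2f}T in 2030")
```

This gives about 38% for $3T and about 46% for $4T; a 45% CAGR from $600B lands at roughly $3.85T in 2030, near the top of management's range.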

Management comments –

- This is the start of the AI Industrial Revolution.

- AI infrastructure spend is ~$600B in 2025 and is expected to reach $3–4T by 2030.

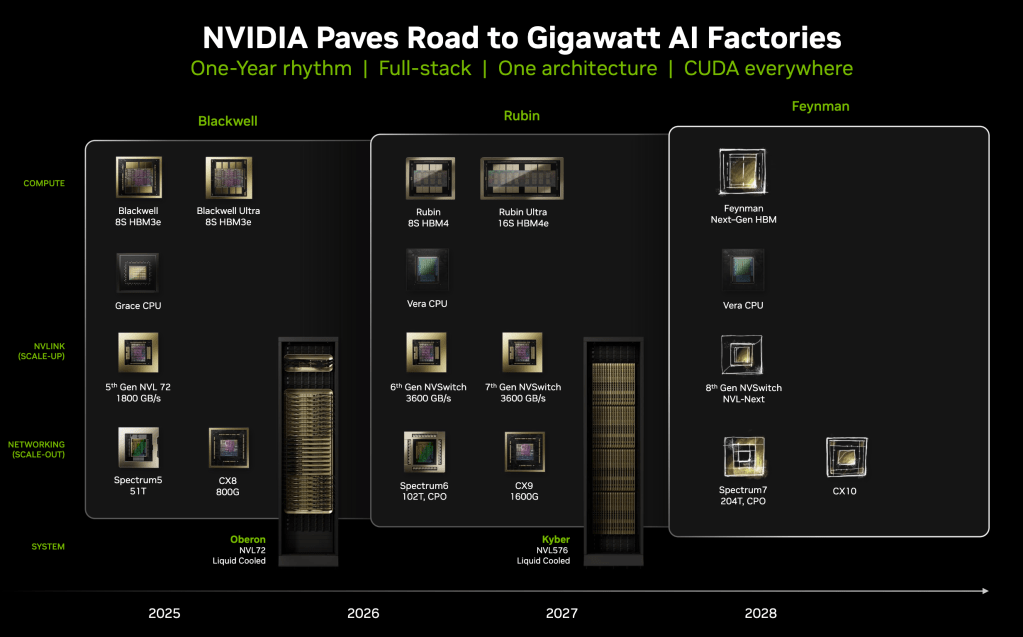

- New product cadence will be yearly.

- Transition to the GB300 rack-based architecture is underway.

- GB300 adoption will accelerate in Q3, with CoreWeave leading its use.

- The next-generation Rubin GPU will enter production in 2026.

Products

FY2025 revenue mix by platform (company reporting):

| Platform / Product Family | What it is | FY2025 Revenue Share |
|---|---|---|
| Data Center (HGX/DGX/GB200, H/B-series GPUs, NVLink, InfiniBand, Spectrum-X; CUDA, AI Enterprise, NIM; DGX Cloud) | End-to-end AI training & inference platforms (silicon, systems, networking + software/services) for hyperscalers & enterprises | ~88% |
| Gaming (GeForce RTX 40/50 Series, RTX software incl. DLSS/Reflex) | Discrete GPUs and ecosystem for gaming/creator PCs and AI PC features | ~9% |
| Professional Visualization (RTX Ada/Blackwell workstation GPUs; Omniverse) | Pro graphics & visualization for designers, engineers, media | ~1.5% |
| Automotive (DRIVE platforms, software) | Compute for ADAS/AV and in-vehicle AI/infotainment | ~1.3% |

GB300

Grace Blackwell "superchip" board that typically includes:

- 1 Grace CPU (ARM-based CPU)

- 2 Blackwell GPUs tightly connected via NVLink

- Depending on the Blackwell generation, this CPU + GPU pairing is called a GB200 or GB300 superchip; superchips are the building blocks of NVL rack systems.

NVL72 server rack

- Rack-scale AI supercomputer that contains 36 Grace CPUs and 72 Blackwell GPUs, all interconnected, i.e., 36 GB200/GB300 superchips in one rack.

- Draws roughly 130–150 kW of power. At ~133 kW per rack, 1 GW supports ~7,500 NVL72 racks (~6,700 at 150 kW).

- Liquid cooled.
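The rack-power figure converts into racks and GPUs per gigawatt with simple division. A minimal sketch, assuming 72 GPUs per NVL72 rack (from the text), a per-rack power draw passed in as a parameter (~133 kW is what the 7,500-racks-per-GW rule of thumb implies; ~150 kW is the higher figure quoted), and no allowance for cooling or other facility overhead; the function names are hypothetical.

```python
# Racks and GPUs per gigawatt for NVL72 systems, given a per-rack power draw.
# Assumptions: 72 GPUs per rack; per-rack power is a parameter because quoted
# figures vary; cooling and facility overhead are ignored.

GPUS_PER_NVL72 = 72
KW_PER_GW = 1_000_000

def racks_per_gw(kw_per_rack: float) -> float:
    """Number of racks whose combined draw equals 1 GW."""
    return KW_PER_GW / kw_per_rack

def gpus_per_gw(kw_per_rack: float) -> float:
    """Number of GPUs housed in 1 GW worth of NVL72 racks."""
    return racks_per_gw(kw_per_rack) * GPUS_PER_NVL72

# ~133 kW/rack (implied by "1 GW = ~7,500 racks") and 150 kW/rack
for kw in (KW_PER_GW / 7_500, 150.0):
    print(f"{kw:.0f} kW/rack: {racks_per_gw(kw):,.0f} racks/GW, {gpus_per_gw(kw):,.0f} GPUs/GW")
```

At 133–150 kW per rack this gives roughly 6,700–7,500 racks, or about 480,000–540,000 GPUs, per GW. A ≥10 GW deployment such as OpenAI's would therefore imply on the order of 5 million GPUs, consistent with the "millions of GPUs" in the contract table.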

NVIDIA Accelerated Computing Products

| Architecture / Product | Launch Timing | Description & Highlights |
|---|---|---|
| H200 | 2024 | Hopper-architecture GPU, successor to H100. Upgraded with HBM3e memory for higher bandwidth & larger capacity. Single-die design, widely used for training/inference in 2024–25. |
| GB200 (Grace-Blackwell) | 2025 (early) | First Blackwell generation superchip: 2× B200 GPUs + 1× Grace CPU. Powers the GB200 NVL72 rack, integrating GPUs, CPUs, NVLink, and InfiniBand networking for hyperscale AI training. |
| GB300 (Grace-Blackwell Ultra) | 2025 (mid-late, volume shipments Sep 2025) | 2× Blackwell Ultra (B300) dual-die GPUs + 1× Grace CPU. Each GPU has 208B transistors and up to 288 GB HBM3e; ~1.5× the performance of GB200. Deployed in GB300 NVL72 racks (72 GPUs, 36 CPUs, >1 exaFLOP FP4 per rack). Enhanced cooling, modularity, and ConnectX-8 networking. |
| Vera Rubin | H2 2026 | Rubin GPU, Vera CPU, NVLink 6. Uses TSMC 3 nm process, HBM4 memory. 5x training and inference performance vs Blackwell, lower token cost. |
| Rubin Ultra | 2027 | Enhanced Rubin platform with dual Rubin chips connected. Designed for even higher throughput and scaling in AI factories. |
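Per-GPU memory figures in the table scale to the rack level by multiplication. A minimal sketch, assuming the "up to 288 GB HBM3e" figure is per B300 GPU, that each GB300 superchip pairs 2 GPUs with 1 Grace CPU, and that all 36 superchips in an NVL72 rack are fully populated; Grace CPU memory is excluded.

```python
# Rack-level HBM capacity for a GB300 NVL72, built up from per-GPU figures.
# Assumptions: 288 GB HBM3e per B300 GPU; 2 GPUs per superchip;
# 36 superchips per rack; Grace CPU memory is not counted.

HBM_GB_PER_B300 = 288
GPUS_PER_SUPERCHIP = 2
SUPERCHIPS_PER_RACK = 36

gpus_per_rack = GPUS_PER_SUPERCHIP * SUPERCHIPS_PER_RACK
hbm_per_superchip_gb = GPUS_PER_SUPERCHIP * HBM_GB_PER_B300
hbm_per_rack_tb = gpus_per_rack * HBM_GB_PER_B300 / 1000  # decimal terabytes

print(f"GPUs per rack:     {gpus_per_rack}")
print(f"HBM per superchip: {hbm_per_superchip_gb} GB")
print(f"HBM per rack:      {hbm_per_rack_tb:.1f} TB")
```

Under these assumptions, a GB300 superchip carries 576 GB of HBM and a full NVL72 rack roughly 20.7 TB.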

NVIDIA Chips: Revenue Contribution & Export Restrictions

| Chip Name | Architecture | Industry / Use Case | Est. 2025 Revenue Contribution (% of ~$147.5B) | Export Restrictions (Countries) |
|---|---|---|---|---|
| A100 | Ampere | AI training, data centers | ~15–20% (via legacy DC infra) | Banned in China (since 2022) |
| A800 | Ampere (China) | China-compliant AI training | ~3–4% (before 2023 phase-out) | Banned in China (Oct 2023) |
| H100 | Hopper | Flagship AI training chip | ~30–35% | Banned in China (since 2022) |
| H800 | Hopper (China) | China-compliant AI training | ~2–3% (before 2023 ban) | Banned in China (Oct 2023) |
| H200 (New) | Hopper | Advanced AI/LLM training | ~3–5% (ramping in late FY25) | Expected to be restricted |
| H20 | Hopper (China) | China-specific variant of H100 | ~1–2% | Banned April 2025 (conditionally reapproved) |
| B100 (New) | Blackwell | Next-gen AI training | ~2–4% (starts late FY25) | Expected to be restricted |
| B200 (New) | Blackwell | Most powerful Blackwell chip | ~4–6% (ramping in 2H FY25) | Expected to be restricted |
| B40 (New) | Blackwell (China) | China-compliant Blackwell chip | <1% | Designed to comply with US export rules |
| L40 / L40S | Ada Lovelace | AI inference, visualization | ~3–4% | Banned in China (Oct 2023) |
| GeForce RTX 4090 | Ada Lovelace | Gaming, prosumer AI | ~6–7% | Banned in China (Oct 2023) |
| GeForce RTX 4090D | Ada Lovelace (China) | China variant of 4090 | ~1% | Restricted; limited sales |
| GeForce RTX 5090 | Blackwell-based | Next-gen gaming GPU | ~1–2% (launched Jan 2025) | Likely restricted in China |
| Quadro RTX / Pro GPUs | Varies | Professional visualization | ~1–1.5% | No known bans |
| Orin / Tegra | Custom ARM | Automotive (ADAS, AV) | ~1.1–1.2% | No known bans |
| OEM / Embedded | Varies | General computing / edge AI | ~0.3% | No known bans |

Nvidia Open Models

Physical AI & Robotics Open Models

Autonomous Driving AI Model: Alpamayo

Business Model

NVIDIA sells high-performance accelerators and platform systems (HGX/DGX/GB200 Grace-Blackwell), high-speed networking (NVLink, InfiniBand, Spectrum-X), and a growing software stack (CUDA, NVIDIA AI Enterprise, NIM microservices, Omniverse) delivered on-prem and via clouds (e.g., DGX Cloud). Revenue is generated from silicon/modules, complete systems, networking gear, enterprise software licenses/subscriptions, and cloud platform consumption—often bundled as a full-stack solution with deep developer support.

Customers

Core customers include global cloud service providers and hyperscalers (e.g., AWS, Microsoft Azure, Google Cloud, Oracle Cloud), specialized AI clouds (e.g., CoreWeave), large internet platforms, and enterprises across healthcare, financial services, telecom, industrials, and media. OEMs/partners such as Cisco integrate NVIDIA networking and platforms for enterprise AI deployments. Automotive customers adopt DRIVE for next-gen vehicles.

Competitors

- AMD — Instinct MI300/MI325/MI350 data-center GPUs compete with NVIDIA’s H/B/GB-series accelerators for AI training/inference.

- Intel — Gaudi 2 / Gaudi 3 AI accelerators, plus Xeon/Granite Rapids, target similar AI workloads and data-center deployments.

- Google (Cloud TPU) — TPU v5e/v5p accelerators offered on Google Cloud directly compete for AI training/inference workloads vs. NVIDIA GPU instances.

Founding History

NVIDIA was founded on April 5, 1993 by Jensen Huang, Chris Malachowsky, and Curtis Priem to bring 3D graphics to PCs; it introduced the GPU in 1999, later opening GPUs to general-purpose computing with CUDA (2006) and pivoting the company to AI and accelerated computing.